Scenario: I have 7 hosts connecting to an EqualLogic group (2 x 6000s). Three LUNs are presented to each host, with access restricted to a specific iSCSI IP range (e.g. 172.17.16.0/24). Six of the hosts could see all 3 LUNs, but one host could only see 2 of the 3. The protocol is iSCSI, and I was using the software initiator on each host.

Upon discovering this I placed the affected ESXi host in maintenance mode.

I then started troubleshooting:

# esxcfg-scsidevs -m

naa.xxxxxx:1 /vmfs/devices/disks/naa.xxxxxxx:1 xxxxxxxx-xxxxxxxx-xxxxxxxxxxxx 0 LUN1

naa.zzzzzz:1 /vmfs/devices/disks/naa.zzzzzzz:1 zzzzzzzz-zzzzzzzz-zzzzzzzzzzzz 0 VCLOUD2

naa.yyyyyy:1 :1 yyyyyyyy-yyyyyyyy-yyyyyyyyy 0 VCLOUD3
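
The third row is the giveaway: for VCLOUD3 the device path column appears to be missing, leaving only `:1`. A minimal Python sketch for flagging such rows is below. It assumes the five whitespace-separated columns shown above and volume labels without spaces; the function name `find_broken_mappings` is hypothetical, and the sample text is copied from the output above.

```python
# Sample text copied from the (anonymised) esxcfg-scsidevs -m output above.
SAMPLE_OUTPUT = """\
naa.xxxxxx:1 /vmfs/devices/disks/naa.xxxxxxx:1 xxxxxxxx-xxxxxxxx-xxxxxxxxxxxx 0 LUN1
naa.zzzzzz:1 /vmfs/devices/disks/naa.zzzzzzz:1 zzzzzzzz-zzzzzzzz-zzzzzzzzzzzz 0 VCLOUD2
naa.yyyyyy:1 :1 yyyyyyyy-yyyyyyyy-yyyyyyyyy 0 VCLOUD3
"""


def find_broken_mappings(output):
    """Return (device, label) pairs whose second column is not a full device path.

    Assumption: each row has five whitespace-separated columns and the
    volume label (last column) contains no spaces.
    """
    broken = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 5:
            continue  # skip blank or unexpected lines
        device, path, label = fields[0], fields[1], fields[-1]
        if not path.startswith("/vmfs/devices/disks/"):
            broken.append((device, label))
    return broken


if __name__ == "__main__":
    for device, label in find_broken_mappings(SAMPLE_OUTPUT):
        print(f"{label}: no device path for {device}")
```

Run against the saved output from each host, this would make it easier to spot a host that has quietly lost a datastore.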

Checking the vmkernel.log showed:

2015-03-04T15:31:59.543Z cpu2:16386)ScsiDeviceIO: 2316: Cmd(0x4124037f6d40) 0x2a, CmdSN 0x3af14 from world 17304 to dev “naa.yyyyyyyy-yyyyyyyyyyyyy-yyyyyyyyyyyy” failed H:0x1 D:0x0 P:0x0 Possible sense data: 0x5 0x20 0x0.

The vmkwarning log showed:

2015-03-04T14:44:44.632Z cpu0:4886104)WARNING: Vol3: 1717: Failed to refresh FS 53a19388-b2d9b366-365a-0024e83f4f68 descriptor: Device is permanently unavailable

According to VMware KB 1029039, a host status of 0x1 (the H:0x1 in the vmkernel.log line above) means: “This status is returned if the connection is lost to the LUN. This can occur if the LUN is no longer visible to the host from the array side or the physical connection to the array has been removed.”

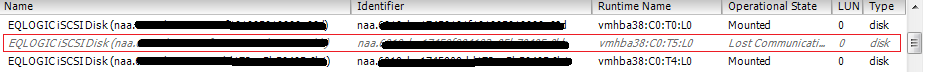
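
To pull the status triplet out of a vmkernel.log line without reading it by eye, something like the following Python sketch could be used. It assumes the `H:0x.. D:0x.. P:0x..` format seen in the log line above; the function name `decode_status` and the lookup table are hypothetical, and the table only holds the two host codes I am sure of (anything else should be looked up in the KB).

```python
import re

# Matches the host/device/plugin status triplet, e.g. "H:0x1 D:0x0 P:0x0".
STATUS_RE = re.compile(
    r"H:(0x[0-9a-fA-F]+) D:(0x[0-9a-fA-F]+) P:(0x[0-9a-fA-F]+)"
)

# Deliberately incomplete: only the codes discussed in this post.
HOST_STATUS = {
    0x0: "OK (no host-side error)",
    0x1: "connection to the LUN lost",
}


def decode_status(line):
    """Return the host/device/plugin status codes from a log line, or None."""
    match = STATUS_RE.search(line)
    if match is None:
        return None
    host, device, plugin = (int(code, 16) for code in match.groups())
    return {
        "host": host,
        "device": device,
        "plugin": plugin,
        "host_meaning": HOST_STATUS.get(host, "unknown, check the KB"),
    }


if __name__ == "__main__":
    sample = (
        "2015-03-04T15:31:59.543Z cpu2:16386)ScsiDeviceIO: 2316: "
        "Cmd(0x4124037f6d40) 0x2a, CmdSN 0x3af14 from world 17304 "
        "failed H:0x1 D:0x0 P:0x0 Possible sense data: 0x5 0x20 0x0."
    )
    print(decode_status(sample))
```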

This suggests there was an APD (All Paths Down) situation at some point, possibly due to a SAN-side or physical connectivity problem. As far as I know, neither had occurred recently. Luckily, no VMs on that host were actively using the datastore, so no downtime was incurred. The flip side was that I had no idea when the problem first started; the datastore could have been down for months!

The resolution was to reboot the host.
